WIP - Privacy-preserving machine learning: Methods, challenges and directions#

Note

Hey guys, this is my personal reading note. There may be some mistakes in my understanding, so please feel free to correct me (hsiangjenli@gmail.com) if you find any. Thanks!

Publication Year : 2021

Authors : Xu, Baracaldo, and Joshi

Before starting#

Before starting to read the paper, the basic concepts you need to know are as follows:

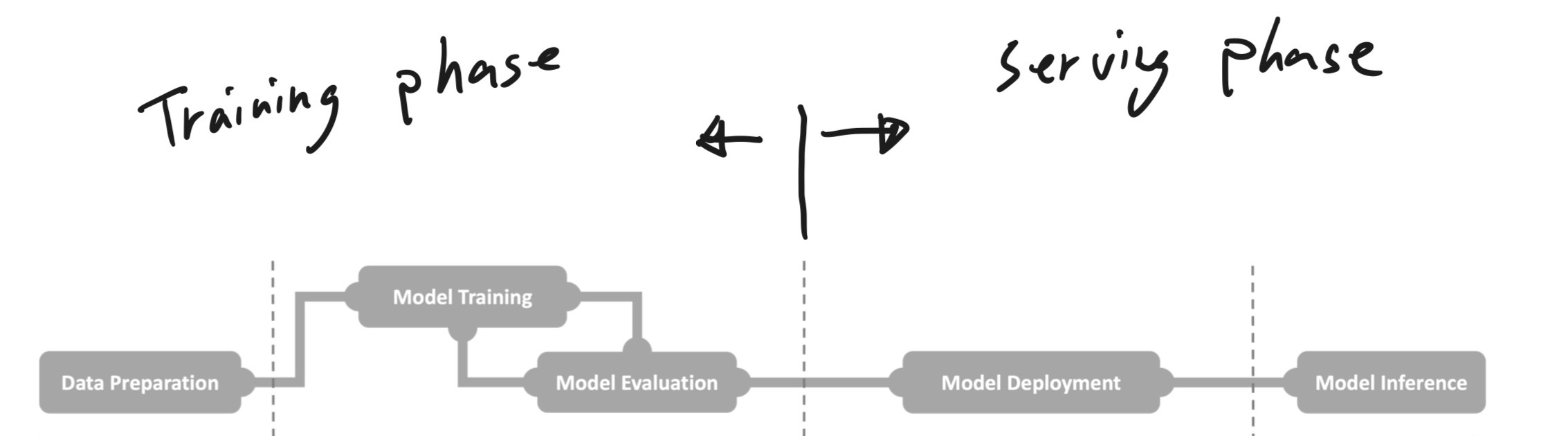

Entire ML pipeline process

The participants in the ML pipeline

Key Terms#

Privacy-preserving machine learning (PPML)

Complete Model \(\rightarrow\) Trained on a single machine

Global Model \(\rightarrow\) Trained across multiple machines

Data Producer (DP)

Model Consumer (MC)

Computational Facility (CF)

Confidential-level privacy

Homomorphic encryption (HE)

Functional encryption (FE)

Differential privacy

Multi-party computation (MPC)

Secure multi-party computation (SMPC)

Garbled circuit

Oblivious transfer

Contributions [1]#

Surveyed existing privacy-preserving approaches

Proposed an evaluation framework for PPML, which decomposes privacy-preserving features into distinct Phase, Guarantee, and Utility aspects (PGU).

Phase : Represents the use of privacy-preserving techniques at different stages in the ML pipeline

Guarantee : The level of privacy protection that a privacy-preserving technique provides in a specific scenario

Utility : The impact of privacy-preserving techniques on the model’s performance

Phases of ML Pipeline#

Privacy Preserving Data Preparation (Data Perspective)#

Privacy Preserving Model Training (Computational Perspective)#

This phase supports computation on encrypted data [1]. Typically, crypto-based training wraps the computation in two additional steps: encoding and decoding [1].

Encoding \(\rightarrow\) Transform floating-point values into integers

Decoding \(\rightarrow\) Recover the floating-point values from the trained model or from the crypto-based training results
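The encoding and decoding steps above can be sketched with plain fixed-point arithmetic. This is only an illustration under my own assumptions: the scale factor `SCALE`, the helper names `encode` and `decode`, and the `depth` parameter are hypothetical and not taken from the paper, and ordinary integer arithmetic stands in for the encrypted-domain computation.

```python
SCALE = 2 ** 16  # assumed precision factor; the paper does not fix a value


def encode(x: float, scale: int = SCALE) -> int:
    """Map a floating-point value to an integer by scaling and rounding."""
    return round(x * scale)


def decode(n: int, scale: int = SCALE, depth: int = 1) -> float:
    """Recover a float; `depth` is the number of encoded factors multiplied together."""
    return n / scale ** depth


weights = [0.5, -1.25, 2.0]
features = [1.5, 0.25, -0.75]

# Integer-only inner product, standing in for the computation on encrypted data.
enc_dot = sum(encode(w) * encode(x) for w, x in zip(weights, features))

# Each product carries two scale factors, so decoding uses depth=2.
dot = decode(enc_dot, depth=2)
```

The design point to notice is that every multiplication of two encoded values accumulates another scale factor, so the decoder has to know how deep the computation was.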

Privacy Preserving Model Serving (Model Perspective)#

Includes model deployment and inference [1]

Private aggregation of teacher ensembles (PATE)

Model transform

Model compression

Privacy Guarantee#

Object-Oriented Privacy Guarantee

Data-oriented privacy guarantee : Prevents the leakage of data, at the cost of some data utility [1]

Anonymization mechanisms need to aggregate or remove appropriate feature values, and certain values of quasi-identifier features are erased altogether.

Differential privacy requires adding noise, calibrated to a privacy budget, to the data samples.

Encrypting the data can ensure the dataset’s confidentiality, but it adds processing overhead to the subsequent machine learning training.
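To make the utility cost of data-oriented differential privacy concrete, here is a minimal sketch that perturbs each data sample with Laplace noise. It is my own illustration, not a method from the paper: the function name `perturb_samples`, the value bounds, and the chosen `epsilon` are assumptions. Each value is clipped to a known range so that the noise scale can be set to (range / epsilon).

```python
import numpy as np


def perturb_samples(values, lower, upper, epsilon, rng):
    """Clip each sample to [lower, upper] and add Laplace noise of scale (upper - lower) / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    clipped = np.clip(np.asarray(values, dtype=float), lower, upper)
    scale = (upper - lower) / epsilon
    return clipped + rng.laplace(loc=0.0, scale=scale, size=clipped.shape)


rng = np.random.default_rng(seed=0)
ages = [23, 35, 41, 29, 52, 38]

# A smaller epsilon means stronger privacy but noisier (less useful) data.
noisy_ages = perturb_samples(ages, lower=18, upper=90, epsilon=1.0, rng=rng)
```

With a range of 72 and `epsilon=1.0`, the noise scale is 72, so individual noisy values are nearly useless on their own. That is the privacy/utility trade-off mentioned above.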

Model-oriented privacy guarantee : Prevents adversaries from extracting private information through repeated model queries [1]

Perturb the trained model

DP-SGD [19] : Adds noise to the clipped gradients to obtain a differentially private model

Regulate how often, and in what patterns, the model can be accessed
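The DP-SGD idea mentioned above (clip each gradient, then add noise) can be sketched in NumPy as a single update step. This is a simplified sketch under my own assumptions: the function name `dp_sgd_step` and all hyperparameter values are hypothetical, the toy model is a linear regression, and the privacy accounting that a real DP-SGD implementation needs is left out.

```python
import numpy as np


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One update: clip each example's gradient, sum, add Gaussian noise, average."""
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    # Scale down any gradient whose L2 norm exceeds clip_norm.
    factors = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * factors
    # Noise standard deviation is proportional to the clipping bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=params.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / len(per_example_grads)
    return params - lr * noisy_mean


rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))
y = X @ np.array([1.0, -2.0, 0.5])
w = np.zeros(3)

# Per-example gradient of 0.5 * (x . w - y)^2 with respect to w.
residuals = X @ w - y
grads = residuals[:, None] * X

w = dp_sgd_step(w, grads, lr=0.1, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping bounds how much any single training example can move the model, which is what lets the added noise hide that example's contribution.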

Pipeline-Oriented Privacy Guarantee

References#

[1] Runhua Xu, Nathalie Baracaldo, and James Joshi. Privacy-preserving machine learning: methods, challenges and directions. arXiv preprint arXiv:2108.04417, 2021.

[2] Latanya Sweeney. K-anonymity: a model for protecting privacy. International journal of uncertainty, fuzziness and knowledge-based systems, 10(05):557–570, 2002.

[3] Ashwin Machanavajjhala, Daniel Kifer, Johannes Gehrke, and Muthuramakrishnan Venkitasubramaniam. L-diversity: privacy beyond k-anonymity. ACM transactions on knowledge discovery from data (TKDD), 1(1):3–es, 2007.

[4] Ninghui Li, Tiancheng Li, and Suresh Venkatasubramanian. T-closeness: privacy beyond k-anonymity and l-diversity. In 2007 IEEE 23rd international conference on data engineering, 106–115. IEEE, 2006.

[5] Mengwei Yang, Linqi Song, Jie Xu, Congduan Li, and Guozhen Tan. The tradeoff between privacy and accuracy in anomaly detection using federated xgboost. arXiv preprint arXiv:1907.07157, 2019.

[6] Tian Li, Zaoxing Liu, Vyas Sekar, and Virginia Smith. Privacy for free: communication-efficient learning with differential privacy using sketches. arXiv preprint arXiv:1911.00972, 2019.

[7] Farzin Haddadpour, Belhal Karimi, Ping Li, and Xiaoyun Li. Fedsketch: communication-efficient and private federated learning via sketching. arXiv preprint arXiv:2008.04975, 2020.

[8] Cynthia Dwork. Differential privacy: a survey of results. In International conference on theory and applications of models of computation, 1–19. Springer, 2008.

[9] Cynthia Dwork, Guy N Rothblum, and Salil Vadhan. Boosting and differential privacy. In 2010 IEEE 51st annual symposium on foundations of computer science, 51–60. IEEE, 2010.

[10] Cynthia Dwork, Aaron Roth, and others. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014.

[11] Gilbert Wondracek, Thorsten Holz, Engin Kirda, and Christopher Kruegel. A practical attack to de-anonymize social network users. In 2010 IEEE symposium on security and privacy, 223–238. IEEE, 2010.

[12] Md Atiqur Rahman, Tanzila Rahman, Robert Laganière, Noman Mohammed, and Yang Wang. Membership inference attack against differentially private deep learning model. Trans. Data Priv., 11(1):61–79, 2018.

[13] Reza Shokri, Marco Stronati, Congzheng Song, and Vitaly Shmatikov. Membership inference attacks against machine learning models. In 2017 IEEE symposium on security and privacy (SP), 3–18. IEEE, 2017.

[14] Jianwei Qian, Xiang-Yang Li, Chunhong Zhang, and Linlin Chen. De-anonymizing social networks and inferring private attributes using knowledge graphs. In IEEE INFOCOM 2016-The 35th Annual IEEE International Conference on Computer Communications, 1–9. IEEE, 2016.

[15] Masahiro Yagisawa. Fully homomorphic encryption without bootstrapping. Cryptology ePrint Archive, 2015.

[16] Jung Hee Cheon, Andrey Kim, Miran Kim, and Yongsoo Song. Homomorphic encryption for arithmetic of approximate numbers. In Advances in Cryptology–ASIACRYPT 2017: 23rd International Conference on the Theory and Applications of Cryptology and Information Security, Hong Kong, China, December 3-7, 2017, Proceedings, Part I 23, 409–437. Springer, 2017.

[17] Michel Abdalla, Florian Bourse, Angelo De Caro, and David Pointcheval. Simple functional encryption schemes for inner products. In IACR International Workshop on Public Key Cryptography, 733–751. Springer, 2015.

[18] Michel Abdalla, Dario Catalano, Dario Fiore, Romain Gay, and Bogdan Ursu. Multi-input functional encryption for inner products: function-hiding realizations and constructions without pairings. In Advances in Cryptology–CRYPTO 2018: 38th Annual International Cryptology Conference, Santa Barbara, CA, USA, August 19–23, 2018, Proceedings, Part I 38, 597–627. Springer, 2018.

[19] Martin Abadi, Andy Chu, Ian Goodfellow, H Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, 308–318. 2016.